21 Composing with Generative AI

Overview

In this chapter, you will be introduced to the ways that instructors and researchers think about integrating AI into educational tasks. One such model is the AI Assessment Scale, which provides categories to classify different levels of AI usage. Another is the Centaurs, Cyborgs, and Resisters model, which was adapted from Ethan Mollick to describe overall attitudes toward the use of AI. You’ll also learn best practices for addressing academic integrity issues, such as being accused of unauthorized or inappropriate AI use.

Learning Outcomes

By the end of this chapter, you’ll be able to:

- Describe and give examples of different levels of AI use

- Differentiate between AI usage styles

- Apply principles for using AI in your academic work, including the choice not to use AI

It’s easy to imagine how generative AI technology could contribute to our rhetorical processes, or even change how we think of rhetoric and communication. For example, we may soon see accessible models fine-tuned on personalized datasets (e.g., our own emails), which might help language models better mimic the voice of the writer instead of producing the generic, AI-influenced style of writing that has become relatively identifiable to some teachers.

As language models begin to link applications we use on a daily basis through a single interface, AI plug-ins and apps will extend the capabilities of LLMs for use in search, as well as a host of other writing tasks. While many writers have been using ChatGPT as a standalone application, Google and Microsoft have begun embedding language models in their word processing systems and office software — a feature that will soon be rolled out on a massive scale. Our writing environments will inevitably be shaped by these AI integrations, but it’s unclear what effect this integration will have on our writing or writing processes.

Some specific ways that you might use generative AI as part of your writing process include:

- Generating a list of potential topics or claims for a research project

- Summarizing an article, or compiling a literature review from a set of scholarly articles

- Editing

- Drafting new versions of writing that you do regularly, such as memos or emails

At the same time, however, remember that generative AI has significant limitations that can create problems writers need to account for.

Principles for Using AI

No matter what your career interests are, generative AI and machine learning are becoming everyday tools. Understanding the basics of how they work, as well as their weaknesses and limitations, is important, even if you choose not to use them. In his book Co-Intelligence, Ethan Mollick presents guiding principles that can help you navigate AI effectively in your work life. Those who wish to mix AI tools into their workflows may find these principles useful.

Principle 1: Invite AI to the Table

AI fluency is becoming an increasingly valuable skill in the workplace. By learning to use these tools now, you can set yourself up to adjust seamlessly as they evolve and become more powerful. One approach for integrating AI into your workflow is to simply ask, “Can I use AI here?” in all situations.

Principle 2: Be the Human in the Loop

In Co-Intelligence, Mollick emphasizes the importance of “being the human in the loop.” This means actively checking AI’s outputs for accuracy, maintaining ethical standards, and applying your own judgment. Unlike a simple calculator, AI requires you to bring oversight, critical thinking, and responsibility to the collaboration.

For students, remaining the human in the loop means you also need to bring a sufficient level of knowledge to critically evaluate AI outputs. You will likely need to build this knowledge in ways that are unassisted by AI. In short, you should consider using AI when faced with a problem or challenge, but always review its output carefully to ensure accuracy.

The AI Assessment Scale

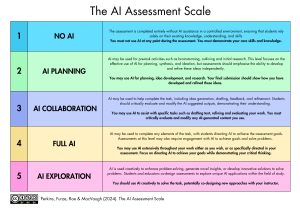

As generative AI becomes more common in education settings, instructors and students need a common way to talk about how these tools are used. Researchers Mike Perkins, Leon Furze, Jasper Roe, and Jason MacVaugh developed the AI Assessment Scale in 2023 and updated it in 2024 to provide a nuanced framework for integrating AI into education.

Figure 21.1. The AI Assessment Scale. (AI_Assessment_Scale_Infographic_Accessible [New Tab])

Understanding the Levels

Level 1: No AI

The assessment is completed entirely without AI assistance in a controlled environment, ensuring that students rely solely on their existing knowledge, understanding, and skills.

Example: You must not use AI at any point during the assessment. You must demonstrate your core skills and knowledge.

Level 2: AI Planning

AI may be used for pre-task activities such as brainstorming, outlining, and initial research. This level focuses on the effective use of AI for planning, synthesis, and ideation. Assessments should emphasize the ability to develop and refine these ideas independently.

Example: You may use AI for planning, idea development, and research. Your final submission should show how you have developed and refined these ideas.

Level 3: AI Collaboration

AI may be used to help complete the task, including idea generation, drafting, feedback, and refinement. Students should critically evaluate and modify the AI-suggested outputs, demonstrating their understanding.

Example: You may use AI to assist with specific tasks such as drafting text, as well as refining and evaluating your work. You must critically evaluate and modify any AI-generated content you use.

Level 4: Full AI

AI may be used to complete any elements of the task, with students directing AI to achieve the assessment goals. Assessments at this level may also require engagement with AI to achieve goals and solve problems.

Example: You may use AI extensively throughout your work either as you wish, or as specifically directed in your assessment. Focus on directing AI to achieve your goals while demonstrating your critical thinking.

Level 5: AI Exploration

AI is used creatively to enhance problem-solving, generate novel insights, or develop innovative solutions to problems. Students and educators co-design assessments to explore unique AI applications within the field of study.

Example: You should use AI creatively to solve the task, potentially co-designing new approaches with your instructor.

Reflection

Think about a project that you are currently working on, and then answer the following questions:

- What would it look like to integrate AI at a given level for this project?

- What might change about your specific work tasks? (For example, how would you use AI at the planning stage vs. full AI use?)

- Which of these levels best matches how you have used AI for school so far? Does your level of AI use change based on the class or the instructor?

Centaurs, Cyborgs, and Resisters: Understanding Your AI Style

How you use AI may depend on your comfort level. Some people blend AI seamlessly into their work, while others prefer a clear boundary between human-created and AI-generated content. Some may take a more oppositional stance towards these tools. In his book Co-Intelligence, Mollick uses the metaphors of centaurs and cyborgs to describe the first two approaches. We’re adding a third category, resisters.

Centaurs

A centaur’s approach has clear lines between human and machine tasks — like the mythical centaur, which has the upper body of a human and the lower body of a horse. Centaurs divide tasks strategically: The human handles what they’re best at, while AI manages other parts. Here’s Mollick’s example: you might use your expertise in statistics to choose the best model for analyzing your data, but then ask AI to generate a variety of interactive graphs. AI becomes, for a centaur, a tool for specific tasks within their workflow.

Cyborgs

Cyborgs deeply integrate their work with AI. They don’t just delegate tasks — they blend their efforts with AI, constantly moving back and forth between human and machine. A cyborg approach might look like writing part of a paragraph, asking AI to suggest how to complete it, and revising the AI’s suggestion to match your style. Cyborgs may be more likely to violate a course’s AI policy, so be aware of your instructor’s preferences.

Resisters: The Diogenes Approach

Mollick does not suggest this third option, but we find it important to recognize that some students and professionals feel deeply uncomfortable with even the centaur approach, and our institution and faculty will support this preference as well. Not everyone will embrace AI. Some may prefer to actively resist its influence, raising critical awareness about its limitations and risks. Like the ancient Greek philosopher Diogenes [Website], who made challenging cultural norms his life’s work, you might focus on warning others about AI’s potential downsides and advocating for caution in its use. Of course, those taking this stance should understand the tool as well as centaurs and cyborgs do. In fact, resisters may need to study AI tools even more deliberately.

What If I Don’t Use Generative AI?

There are plenty of ethical concerns associated with generative artificial intelligence, and we have found that students who are educated about these concerns sometimes prefer not to use or interact with generative AI tools. Maybe you are one of those students.

Some students are rightly uncomfortable with using AI. What should you do if a teacher requires it? First, know that AI is a developing technology, and the ways that AI can be implemented (or avoided) in a classroom vary widely. Keep in mind the instructor’s intent. AI is increasingly in demand in the workplace; because higher education is expected to justify how courses foster “durable skills” that translate to professional life, AI fluency will be difficult for faculty to carve out of their syllabi. Alternatively, your instructor may include AI assignments to foster awareness of its limits and pitfalls. If you want to resist or critically engage with AI, your ethical stance may be perfectly compatible with using it in a controlled environment.

If you object to the use of AI, but want to take a class that requires it, establish a line of communication early on to see if you can complete alternate assignments, such as arguments that engage critically with the exercise and provide explanations for how the technology may be limited or unethical. Faculty who allow opt-out may provide sample chatbot conversations so that you don’t have to engage with it directly.

You should also check which platform(s) the instructor expects students to use in a given course. Does the institution provide safe and secure access to something like Microsoft Copilot or ChatGPT Enterprise? Are they working with a company that uses APIs from Anthropic, OpenAI, or another company within a contained environment that doesn’t share your data? If not, the instructor may require you to sign up for a service that violates basic expectations around privacy. If the nature of the course content involves highly personal work, it’s okay to speak up about this issue. Alternatively, you can look for ways to transfer to another section early in the semester if the professor is unable to resolve your objections.

When You Are Unfairly Accused of Unauthorized AI Use

What happens if you’re unfairly accused of using generative AI? Unfortunately, as of this writing in 2024, such accusations are extremely common. It helps to know that a high percentage of faculty are still trying to figure out this technology themselves. They’re still learning about AI, just like you, and they’re applying an older understanding of plagiarism to a new technology. Most higher education institutions did not update their academic integrity policies to include artificial intelligence until 2023. Keep this in mind until most faculty have settled on ways to teach and assess student work that fit how students are actually engaging with their courses.

So, how can you deal with an accusation like this? We have seen that, when a student is accused and receives a zero for an assignment (whether it’s a low-stakes discussion board or a high-stakes paper), it’s extremely important to continue the conversation and ask to meet with the faculty member to demonstrate your proficiency without the help of generative AI. Rather than lash out in anger, show them you’re eager to demonstrate that you’re engaging with the course content. Set up a meeting with the professor as soon after the accusation as you can to calmly communicate your side of the story.

It’s also essential that you understand your institution’s protocols around academic integrity violations. If a student receives a “0” for an assignment because the instructor believes it’s merely AI-generated text, the instructor must follow institutional protocol by notifying academic integrity officers, usually by submitting an academic integrity violation report. Students can and should challenge this if the accusation is unfounded. When reporting a student, faculty must be able to demonstrate “with reasonable certainty” that the student has committed a violation. It doesn’t have to be 100% certainty, but rather something they could argue successfully in an academic integrity hearing.

Reflection

The USF Honor Code [Website] details the standards for academic integrity that apply to all students (undergraduate and graduate) in the College of Arts and Sciences, the School of Education, the School of Management, and the School of Nursing and Health Professions.

Additionally, USF offers faculty three syllabus statement options [Website] that they can choose from to establish how generative AI tools may be used in their courses.

- Which option(s) have you seen most commonly in your courses?

When you meet with the instructor, ask why they think your submission was AI-generated. AI checkers are highly flawed, and AI cannot be used to detect AI with certainty. If communication breaks down and you have to formally appeal the grade, make sure you are aware of institutional appeal deadlines (usually available in your college catalog). Do not hesitate to appeal the grade if your instructor is unwilling to work with you after that initial meeting.

Finally, this entire scenario demonstrates that it’s often helpful to keep a digital record of your work. As we mentioned previously, tracking your version history can be one way to do this. Google Docs and Microsoft Word have histories with timestamps that show the progress of your work. If you’re particularly concerned, you can download Chrome extensions, like Cursive [Website], that record your labor in a more granular way. It’s good practice to write first in a designated writing application like Word or Google Docs, and then copy your work into the LMS. That way, you can prove your labor.

If you are, in fact, guilty of unethical AI use, the best thing to do is simply to ask for an opportunity to redo the assignment or complete an oral assessment. Know that most faculty truly do want to work with you — if they see a good-faith effort to re-engage, many will accept a redo or alternate assessment.

Reflection

Write a reflective paragraph about your experiences with, and feelings about, writing in school. Include the feedback you’ve received (both positive and negative) and any particularly memorable moments. Then, put your paragraph into an LLM like ChatGPT and ask it to apply those feedback suggestions (like “more cohesive” or “more descriptive vocabulary”).

- What do you notice about the LLM’s revised version?

- Does it still reflect your personal voice?

- What’s gained and what’s lost in this revision?

Conclusion

In the next chapter, we will look at how to cite and acknowledge AI use. But as an instructor, I am also curious about why students choose not to use AI tools. For this reason, I request that my students include a “no-AI” acknowledgment statement when they choose not to use AI. Here is the template:

I attest that I did not use any generative AI tools in creating this assignment. I chose not to use AI because:

The important point to remember here is that these tools can be helpful when used correctly, but also have their limitations. Understanding when to use — or not use — AI is becoming an increasingly important skill in both academic and professional settings.

Further Reading and Resources

- “How to Use Rhetoric to Repurpose Content with ChatGPT” [Website]

- “What Is Digital Literacy?” [Website]

- “Is Genre Enough? A Theory of Genre Signaling as Generative AI Rhetoric” [Website]

- “AI Assessment Scale” [Website]

Resources for Instructors

- “Navigating the Generative AI Era: Introducing the AI Assessment Scale for Ethical GenAI Assessment” [Website]

- “AI and Academic Writing Analysis Project” [Website]

Works Cited

“Academic Integrity – Honor Code | MyUSF.” Usfca.edu, 2019, myusf.usfca.edu/academic-integrity/honor-code.

“AI Assessment Scale (AIAS).” AI Assessment Scale (AIAS), 2025, aiassessmentscale.com/.

Cummings, Lance. “How to Use Rhetoric to Repurpose Content with ChatGPT.” Isophist.com, Cyborgs Writing, 9 Oct. 2024, www.isophist.com/p/how-to-use-rhetoric-to-repurpose. Accessed 22 July 2025.

“Diogenes.” Wikipedia, 2 Mar. 2020, en.wikipedia.org/wiki/Diogenes.

Long, Liza. “What Is Digital Literacy?” Cyborgs and Centaurs: Academic Writing in the Age of Generative Artificial Intelligence, Pressbooks, 2025, cwi.pressbooks.pub/longenglish102/chapter/what-is-digital-literacy/. Accessed 22 July 2025.

Mollick, Ethan. Co-Intelligence: Living and Working with AI. Penguin, 2024.

Omizo, Ryan, and Bill Hart-Davidson. “Is Genre Enough? A Theory of Genre Signaling as Generative AI Rhetoric.” Rhetoric Society Quarterly, vol. 54, no. 3, May 2024, pp. 272–85, https://doi.org/10.1080/02773945.2024.2343615. Accessed 29 Oct. 2024.

Perkins, Mike, et al. “The AI Assessment Scale.” The AI Assessment Scale, 2024, aiassessmentscale.com/.

“Syllabus Statement Options for Generative AI | MyUSF.” Usfca.edu, 2024, myusf.usfca.edu/provost/SVPAA/policies-and-procedures/USF%20Syllabus%20Statement%20Options%20for%20Generative%20AI. Accessed 13 July 2025.

Attributions

This chapter was written and remixed by Phil Choong.

The “Principles for Using AI” section was adapted from “Principles for Using AI in the Workplace and the Classroom” by Joel Gladd and is licensed under CC BY-NC-SA 4.0.

The “Centaurs, Cyborgs, and Resisters: Understanding Your AI Style” section was adapted from “What’s Wrong with This Picture?” by Liza Long and is licensed under CC BY-NC-SA 4.0.

“The AI Assessment Scale” section was adapted from AI Assessment Scale by Mike Perkins, Leon Furze, Jasper Roe, and Jason MacVaugh and is licensed under CC BY-NC-SA 4.0.

The “What If I Don’t Use Generative AI?” and “Conclusion” sections were adapted from “What If I Don’t Use Generative AI?” by Liza Long and are licensed under CC BY-NC-SA 4.0.

Media Attributions

- The AI Assessment Scale © Mike Perkins, Leon Furze, Jasper Roe, and Jason MacVaugh is licensed under a CC BY-NC-SA (Attribution NonCommercial ShareAlike) license